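Anthropic Claude is a family of foundation AI models that can be used in a variety of applications. Developers and businesses can use API access to build directly on top of Anthropic's AI infrastructure.

Spring AI supports the Anthropic Messages API for synchronous and streaming text generation.

Anthropic's Claude models are also available through Amazon Bedrock Converse. Spring AI provides a dedicated Amazon Bedrock Converse Anthropic client implementation as well.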

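Prerequisites

You will need to create an API key with the Anthropic portal.

Create an account at the Anthropic API dashboard and generate an API key on the Get API Key page.

The Spring AI project defines a configuration property named spring.ai.anthropic.api-key that you should set to the value of the API key obtained from anthropic.com.

You can set this configuration property in your application.properties file: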

```properties
spring.ai.anthropic.api-key=<your-anthropic-api-key>
```

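For enhanced security when handling sensitive information such as API keys, you can reference a custom environment variable with a Spring property placeholder (${...}) instead of hard-coding the key:

In application.yml:

```yaml
spring:
  ai:
    anthropic:
      api-key: ${ANTHROPIC_API_KEY}
```

In your environment or .env file:

```shell
export ANTHROPIC_API_KEY=<your-anthropic-api-key>
```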

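You can also set this configuration programmatically in your application code:

```java
// Retrieve the API key from a secure source or from an environment variable
String apiKey = System.getenv("ANTHROPIC_API_KEY");
```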
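A slightly more defensive variant fails fast at startup when the variable is missing, so a null key is never passed to the client. The sketch below is plain Java. AnthropicKeyResolver is a hypothetical helper and is not part of Spring AI.

```java
import java.util.function.Function;

// Hypothetical helper (not part of Spring AI): resolves the Anthropic API key
// from an environment lookup and fails fast when it is missing or blank.
final class AnthropicKeyResolver {

    static final String ENV_VAR = "ANTHROPIC_API_KEY";

    private AnthropicKeyResolver() {
    }

    // The lookup is passed in so that System::getenv can be swapped out in tests.
    static String resolve(Function<String, String> env) {
        String key = env.apply(ENV_VAR);
        if (key == null || key.isBlank()) {
            throw new IllegalStateException(ENV_VAR + " is not set");
        }
        // Trim accidental whitespace, for example from a copied .env line.
        return key.strip();
    }
}
```

In an application you would call AnthropicKeyResolver.resolve(System::getenv) and hand the result to the client. Passing the lookup as a function keeps the helper testable without touching real environment variables.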

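Add Repositories and BOM

Spring AI artifacts are published in the Maven Central and Spring Snapshot repositories. Refer to the Artifact Repositories section to add these repositories to your build system.

To help with dependency management, Spring AI provides a BOM (bill of materials) that ensures a consistent version of Spring AI is used throughout the entire project. Refer to the Dependency Management section to add the Spring AI BOM to your build system.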

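Auto-configuration

The artifact names of the Spring AI auto-configuration and starter modules have changed significantly. Please refer to the upgrade notes for more information.

Spring AI provides Spring Boot auto-configuration for the Anthropic Chat Client. To enable it, add the following dependency to your project's Maven pom.xml or Gradle build.gradle file: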

Maven

```xml
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-starter-model-anthropic</artifactId>
</dependency>
```

Gradle

```groovy
dependencies {
    implementation 'org.springframework.ai:spring-ai-starter-model-anthropic'
}
```

Refer to the Dependency Management section to add the Spring AI BOM to your build file.

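Chat Properties

Retry Properties

The prefix spring.ai.retry is used as the property prefix that lets you configure the retry mechanism for the Anthropic chat model.

The current retry policy does not apply to the streaming API.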
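The retry mechanism uses exponential backoff. The sketch below computes the wait before each retry. It assumes that spring.ai.retry.max-attempts, spring.ai.retry.backoff.initial-interval, spring.ai.retry.backoff.multiplier and spring.ai.retry.backoff.max-interval behave as their names suggest. BackoffSchedule is a hypothetical class and is not Spring Retry's implementation.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;

// Simplified model of exponential backoff as configured under spring.ai.retry.*
// Illustration only: the real retry logic lives in Spring Retry.
final class BackoffSchedule {

    private BackoffSchedule() {
    }

    // Returns the wait before each retry; N attempts means N - 1 waits.
    static List<Duration> delays(int maxAttempts, Duration initial, double multiplier, Duration max) {
        List<Duration> result = new ArrayList<>();
        double nextMillis = initial.toMillis();
        for (int retry = 1; retry < maxAttempts; retry++) {
            // Each wait grows by the multiplier but never exceeds the maximum interval.
            long capped = Math.min((long) nextMillis, max.toMillis());
            result.add(Duration.ofMillis(capped));
            nextMillis *= multiplier;
        }
        return result;
    }
}
```

Take an initial interval of 2 seconds, a multiplier of 5 and a maximum interval of 3 minutes. These are values we believe match Spring AI's defaults, so check the retry properties reference. With them, the waits are 2 s, 10 s and 50 s, and every remaining retry waits 3 minutes.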

Connection Properties

The prefix spring.ai.anthropic is used as the property prefix that lets you connect to Anthropic.
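The connection settings ultimately describe an HTTP call to Anthropic. The sketch below builds that request with the JDK's java.net.http client. It assumes the conventions of Anthropic's public Messages API: a POST to /v1/messages on the base URL, the key in the x-api-key header, and the API version (for example 2023-06-01) in the anthropic-version header. AnthropicHttp is a hypothetical name; Spring AI builds this request for you.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical sketch (not Spring AI code) of the HTTP request described by the
// connection settings: base URL, API key and API version.
final class AnthropicHttp {

    private AnthropicHttp() {
    }

    static HttpRequest messagesRequest(String baseUrl, String apiKey, String version, String jsonBody) {
        return HttpRequest.newBuilder()
                .uri(URI.create(baseUrl + "/v1/messages"))     // assumed Messages API path
                .header("x-api-key", apiKey)                   // authentication
                .header("anthropic-version", version)          // API version header
                .header("content-type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(jsonBody))
                .build();
    }
}
```

Building the request does not contact the network. Sending it with HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()) requires a real key and network access.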

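Configuration Properties

Enabling and disabling the chat auto-configuration is now controlled through top-level properties with the prefix spring.ai.model.chat.

To enable: spring.ai.model.chat=anthropic (enabled by default)

To disable: spring.ai.model.chat=none (or any value that does not match anthropic)

This change allows multiple models to be configured.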
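The enable/disable rule above can be restated as a small predicate. This is a simplified illustration. It assumes the value is matched case-insensitively and that a missing property counts as enabled, as "enabled by default" states. ChatModelSelection is a hypothetical class and does not show how Spring Boot evaluates its conditions internally.

```java
import java.util.Map;

// Simplified restatement of the rule: Anthropic chat auto-configuration is active
// when spring.ai.model.chat is absent or equals "anthropic".
final class ChatModelSelection {

    static final String PROPERTY = "spring.ai.model.chat";

    private ChatModelSelection() {
    }

    static boolean anthropicChatEnabled(Map<String, String> properties) {
        String value = properties.get(PROPERTY);
        // Missing property: enabled by default. Any other value (e.g. "none") disables it.
        return value == null || value.equalsIgnoreCase("anthropic");
    }
}
```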

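The prefix spring.ai.anthropic.chat is the property prefix that lets you configure the chat model implementation for Anthropic.

All properties prefixed with spring.ai.anthropic.chat.options can be overridden at runtime by adding request-specific Runtime Options to the Prompt call.

Runtime Options

AnthropicChatOptions.java provides model configurations such as the model to use, the temperature, and the maximum number of tokens.

On start-up, the default options can be configured with the AnthropicChatModel(api, options) constructor or the spring.ai.anthropic.chat.options.* properties.

At run-time you can override the default options by adding new, request-specific options to the Prompt call. For example, to override the default model and temperature for a specific request: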

```java
ChatResponse response = chatModel.call(
    new Prompt(
        "Generate the names of 5 famous pirates.",
        AnthropicChatOptions.builder()
            .model("claude-3-7-sonnet-latest")
            .temperature(0.4)
            .build()
    ));
```

In addition to the model-specific AnthropicChatOptions, you can use a portable ChatOptions instance created with ChatOptionsBuilder#builder().
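The precedence between start-up defaults and request-specific options can be pictured as a field-by-field merge in which any value set on the request wins. The record below is a hypothetical stand-in for AnthropicChatOptions and only illustrates that rule. Spring AI's actual merging logic may differ in detail.

```java
// Hypothetical stand-in for AnthropicChatOptions, used only to illustrate precedence:
// a non-null field on the runtime (request) options overrides the start-up default.
record ChatOptionsSketch(String model, Double temperature, Integer maxTokens) {

    ChatOptionsSketch overriddenBy(ChatOptionsSketch runtime) {
        return new ChatOptionsSketch(
                runtime.model() != null ? runtime.model() : model,
                runtime.temperature() != null ? runtime.temperature() : temperature,
                runtime.maxTokens() != null ? runtime.maxTokens() : maxTokens);
    }
}
```

For example, suppose the defaults are claude-3-5-sonnet-latest, 0.7 and 450, and the request sets claude-3-7-sonnet-latest and 0.4 but leaves max tokens unset. The merged options then keep 450 as the max-tokens value.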

Tool/Function Calling

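You can register custom Java tools with the AnthropicChatModel and have the Anthropic Claude model intelligently choose to output a JSON object containing arguments to call one or more of the registered functions. This is a powerful technique for connecting LLM capabilities with external tools and APIs. Read more about Tool Calling.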
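Under the hood, the model does not run anything itself. It returns a tool_use block that names the tool and carries a JSON input object, and the application then invokes the matching function. The sketch below shows only that dispatch step and assumes the input has already been parsed into a Map. ToolRegistry and the get_weather tool are hypothetical. Spring AI performs this step for you when you register tools.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical sketch of tool dispatch: the model picks a tool name and supplies
// arguments; the application looks up the function and invokes it.
final class ToolRegistry {

    private final Map<String, Function<Map<String, Object>, String>> tools = new LinkedHashMap<>();

    ToolRegistry register(String name, Function<Map<String, Object>, String> tool) {
        tools.put(name, tool);
        return this;
    }

    // Invokes the tool selected by the model with the model-supplied arguments.
    String invoke(String name, Map<String, Object> input) {
        Function<Map<String, Object>, String> tool = tools.get(name);
        if (tool == null) {
            throw new IllegalArgumentException("Unknown tool: " + name);
        }
        return tool.apply(input);
    }
}
```

The returned string would then be sent back to the model in a tool_result block, so the model can compose its final answer.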

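Multimodal

Multimodality refers to a model's ability to simultaneously understand and process information from various sources, including text, PDFs, images, and data formats.

Images

Currently, Anthropic Claude 3 supports the base64 source type for images, and the image/jpeg, image/png, image/gif, and image/webp media types. Check the Vision guide for more information. Anthropic Claude 3.5 Sonnet also supports the pdf source type for application/pdf files.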
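For reference, the base64 source type corresponds to an image content block in Anthropic's Messages API. The sketch below builds that block as a JSON string and rejects any media type outside the four listed above. ImageBlocks is a hypothetical helper. With Spring AI you attach a Media object instead of writing JSON by hand.

```java
import java.util.Base64;
import java.util.Set;

// Hypothetical helper (not Spring AI API) that builds a base64 image content block
// for the Messages API, limited to the supported image media types.
final class ImageBlocks {

    static final Set<String> SUPPORTED = Set.of("image/jpeg", "image/png", "image/gif", "image/webp");

    private ImageBlocks() {
    }

    static String base64ImageBlock(String mediaType, byte[] bytes) {
        if (!SUPPORTED.contains(mediaType)) {
            throw new IllegalArgumentException("Unsupported media type: " + mediaType);
        }
        String data = Base64.getEncoder().encodeToString(bytes);
        // Base64 text and the allowed media types need no JSON escaping.
        return "{\"type\":\"image\",\"source\":{\"type\":\"base64\",\"media_type\":\""
                + mediaType + "\",\"data\":\"" + data + "\"}}";
    }
}
```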

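Spring AI's Message interface supports multimodal AI models by introducing the Media type. This type contains data and details about media attachments in messages, using Spring's org.springframework.util.MimeType and a java.lang.Object for the raw media data.

Below is a simple code example, taken from AnthropicChatModelIT.java, that combines user text with an image.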

```java
var imageData = new ClassPathResource("/multimodal.test.png");

var userMessage = new UserMessage("Explain what do you see on this picture?",
        List.of(new Media(MimeTypeUtils.IMAGE_PNG, imageData)));

ChatResponse response = chatModel.call(new Prompt(List.of(userMessage)));

logger.info(response.getResult().getOutput().getText());
```

It takes the multimodal.test.png image as input:

along with the text message "Explain what do you see on this picture?", and generates a response like:

The image shows a close-up view of a wire fruit basket containing several pieces of fruit.

...

PDF

PDF support (beta) is available starting with Sonnet 3.5. Use the application/pdf media type to attach a PDF file to a message:
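At the API level, a PDF is sent as a document content block with a base64 source and the application/pdf media type. The sketch below assumes that block shape from Anthropic's public API and adds a cheap sanity check on the %PDF- header. PdfBlocks is a hypothetical helper and is not Spring AI API.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.Base64;

// Hypothetical helper (not Spring AI API) that builds a base64 "document" content
// block for a PDF, after checking the file header.
final class PdfBlocks {

    // A well-formed PDF normally starts with the ASCII header "%PDF-".
    private static final byte[] PDF_MAGIC = "%PDF-".getBytes(StandardCharsets.US_ASCII);

    private PdfBlocks() {
    }

    static String base64DocumentBlock(byte[] pdfBytes) {
        if (pdfBytes.length < PDF_MAGIC.length
                || !Arrays.equals(Arrays.copyOf(pdfBytes, PDF_MAGIC.length), PDF_MAGIC)) {
            throw new IllegalArgumentException("Not a PDF file");
        }
        String data = Base64.getEncoder().encodeToString(pdfBytes);
        return "{\"type\":\"document\",\"source\":{\"type\":\"base64\","
                + "\"media_type\":\"application/pdf\",\"data\":\"" + data + "\"}}";
    }
}
```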

```java
var pdfData = new ClassPathResource("/spring-ai-reference-overview.pdf");

var userMessage = new UserMessage(
        "You are a very professional document summarization specialist. Please summarize the given document.",
        List.of(new Media(new MimeType("application", "pdf"), pdfData)));

var response = this.chatModel.call(new Prompt(List.of(userMessage)));
```

Sample Controller

Create a new Spring Boot project and add spring-ai-starter-model-anthropic to your pom (or gradle) dependencies.

Add an application.properties file under the src/main/resources directory to enable and configure the Anthropic chat model:

```properties
spring.ai.anthropic.api-key=YOUR_API_KEY
spring.ai.anthropic.chat.options.model=claude-3-5-sonnet-latest
spring.ai.anthropic.chat.options.temperature=0.7
spring.ai.anthropic.chat.options.max-tokens=450
```

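Replace the api-key value with your Anthropic credentials.

This creates an AnthropicChatModel implementation that you can inject into your classes. Here is an example of a simple @RestController class that uses the chat model for text generation.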

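```java
import java.util.Map;

import org.springframework.ai.anthropic.AnthropicChatModel;
import org.springframework.ai.chat.messages.UserMessage;
import org.springframework.ai.chat.model.ChatResponse;
import org.springframework.ai.chat.prompt.Prompt;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;
import reactor.core.publisher.Flux;

@RestController
public class ChatController {

    private final AnthropicChatModel chatModel;

    @Autowired
    public ChatController(AnthropicChatModel chatModel) {
        this.chatModel = chatModel;
    }

    // Blocking call: returns the whole generation as a single JSON field
    @GetMapping("/ai/generate")
    public Map<String, String> generate(@RequestParam(value = "message", defaultValue = "Tell me a joke") String message) {
        return Map.of("generation", this.chatModel.call(message));
    }

    // Streaming call: emits ChatResponse chunks as they arrive
    @GetMapping("/ai/generateStream")
    public Flux<ChatResponse> generateStream(@RequestParam(value = "message", defaultValue = "Tell me a joke") String message) {
        Prompt prompt = new Prompt(new UserMessage(message));
        return this.chatModel.stream(prompt);
    }
}
```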

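Manual Configuration

The AnthropicChatModel implements ChatModel and StreamingChatModel, and uses the low-level AnthropicApi client to connect to the Anthropic service.

Add the spring-ai-anthropic dependency to your project's Maven pom.xml or Gradle build.gradle file: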

Maven

```xml
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-anthropic</artifactId>
</dependency>
```

Gradle

```groovy
dependencies {
    implementation 'org.springframework.ai:spring-ai-anthropic'
}
```

Refer to the Dependency Management section to add the Spring AI BOM to your build file.

Next, create an AnthropicChatModel and use it for text generation:

```java
var anthropicApi = new AnthropicApi(System.getenv("ANTHROPIC_API_KEY"));

var chatModel = new AnthropicChatModel(anthropicApi,
        AnthropicChatOptions.builder()
            .model("claude-3-opus-20240229")
            .temperature(0.4)
            .maxTokens(200)
            .build());

ChatResponse response = chatModel.call(
        new Prompt("Generate the names of 5 famous pirates."));

// Or with streaming responses
Flux<ChatResponse> responseStream = chatModel.stream(
        new Prompt("Generate the names of 5 famous pirates."));
```

AnthropicChatOptions provides the configuration information for chat requests. AnthropicChatOptions.Builder is a fluent options builder.

Low-level AnthropicApi Client

AnthropicApi is a lightweight Java client for the Anthropic Messages API.
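To show what such a lightweight client sends, the sketch below assembles the JSON body of a single-turn Messages request. It uses the model, max_tokens, temperature, stream and messages fields from Anthropic's public API. MessagesBody is a hypothetical helper with deliberately minimal JSON escaping; a real client would use a JSON library such as Jackson.

```java
// Hypothetical helper (not the AnthropicApi implementation) that builds the JSON body
// of a single user-turn Messages API request.
final class MessagesBody {

    private MessagesBody() {
    }

    static String userMessage(String model, int maxTokens, double temperature, boolean stream, String text) {
        return "{\"model\":" + quote(model)
                + ",\"max_tokens\":" + maxTokens
                + ",\"temperature\":" + temperature
                + ",\"stream\":" + stream
                + ",\"messages\":[{\"role\":\"user\",\"content\":" + quote(text) + "}]}";
    }

    // Minimal JSON string escaping: quotes, backslashes and control characters.
    static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                case '\r' -> sb.append("\\r");
                case '\t' -> sb.append("\\t");
                default -> {
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
                }
            }
        }
        return sb.append('"').toString();
    }
}
```

Setting stream to true in this body is what asks the API for a stream of server-sent events instead of a single JSON response.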

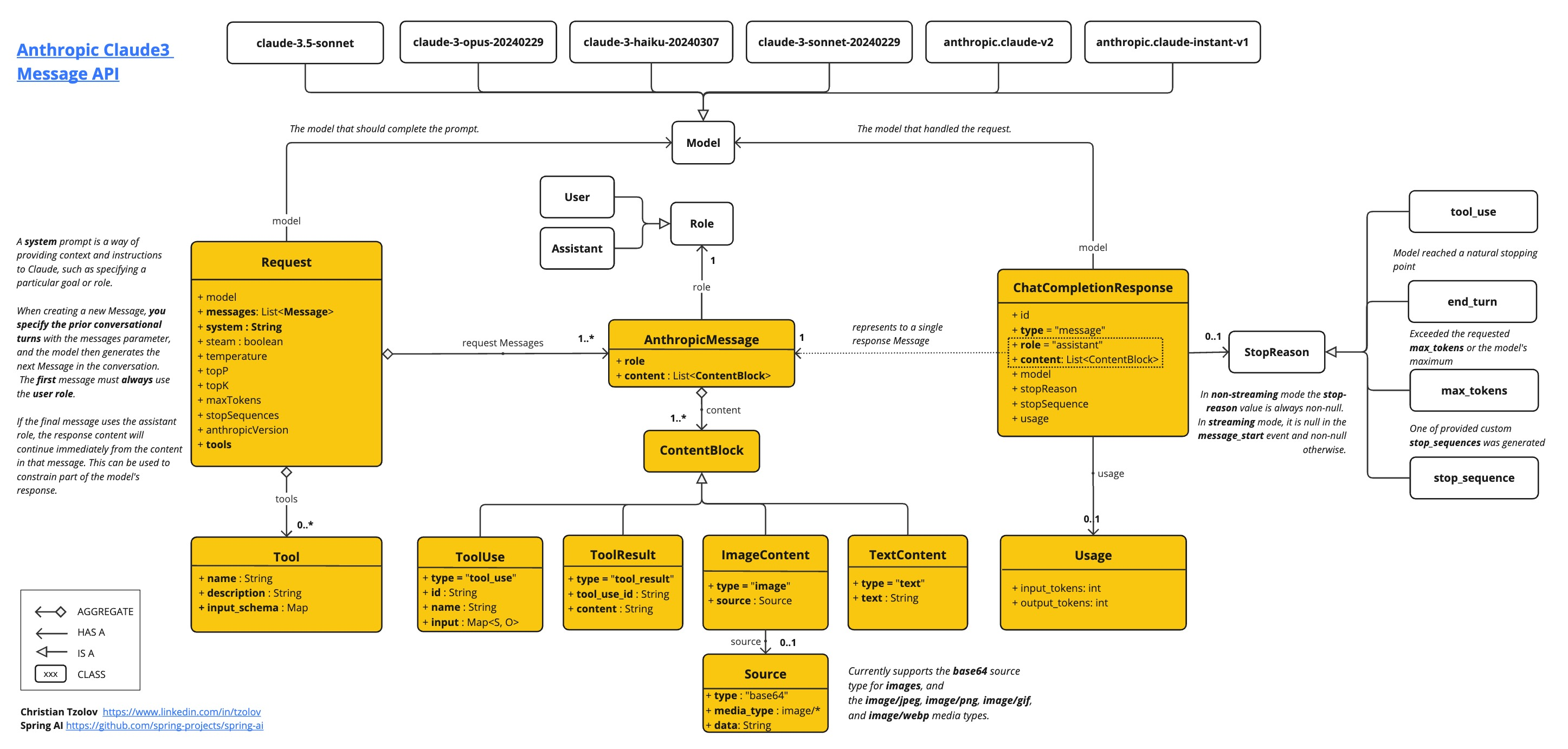

The following class diagram illustrates the AnthropicApi chat interfaces and building blocks:

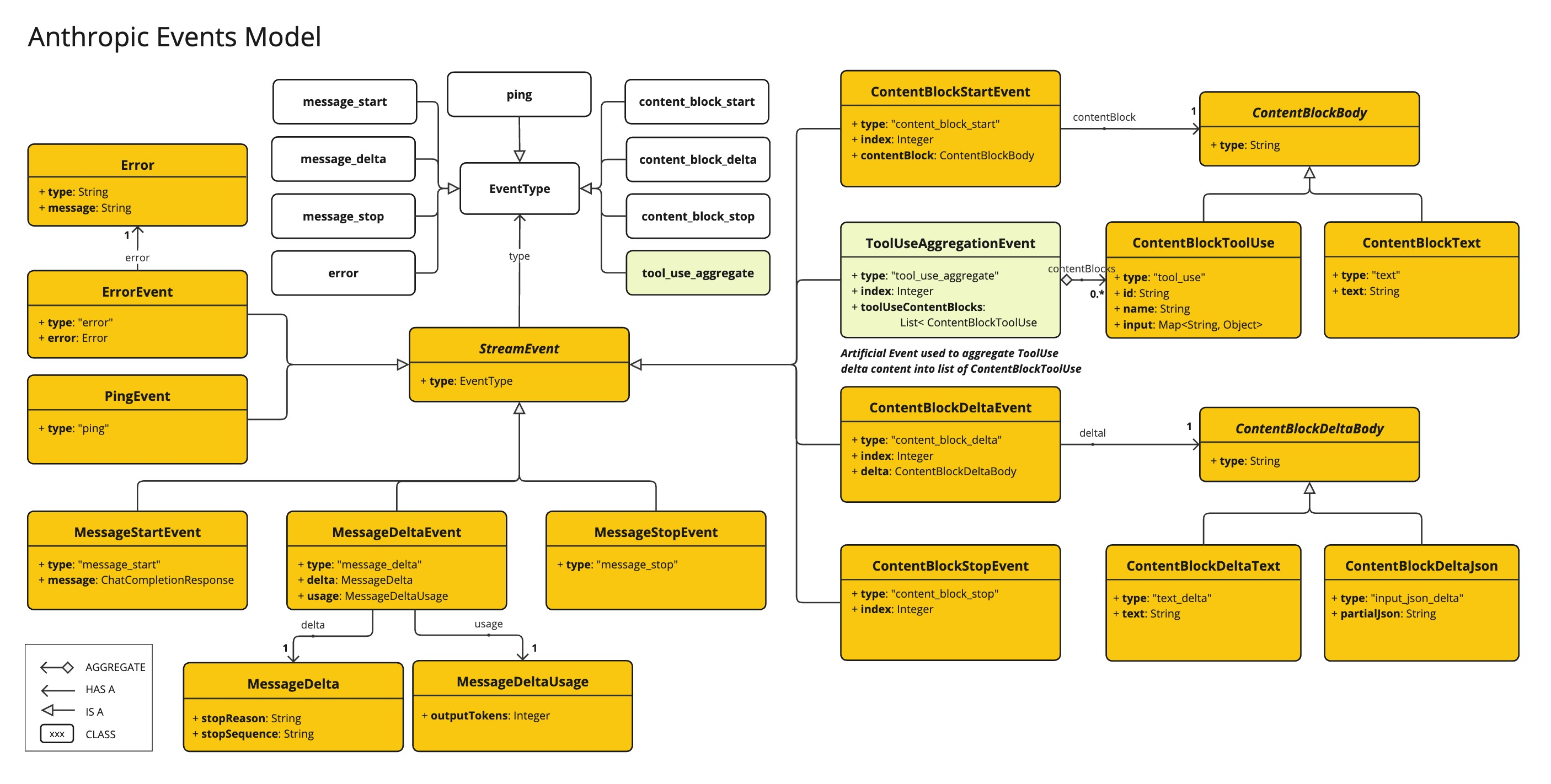

AnthropicApi event model

Here is a simple snippet showing how to use the API programmatically:

```java
AnthropicApi anthropicApi =
        new AnthropicApi(System.getenv("ANTHROPIC_API_KEY"));

AnthropicMessage chatCompletionMessage = new AnthropicMessage(
        List.of(new ContentBlock("Tell me a Joke?")), Role.USER);

// Sync request
ResponseEntity<ChatCompletionResponse> response = anthropicApi
        .chatCompletionEntity(new ChatCompletionRequest(AnthropicApi.ChatModel.CLAUDE_3_OPUS.getValue(),
                List.of(chatCompletionMessage), null, 100, 0.8, false));

// Streaming request
Flux<StreamResponse> streamResponse = anthropicApi
        .chatCompletionStream(new ChatCompletionRequest(AnthropicApi.ChatModel.CLAUDE_3_OPUS.getValue(),
                List.of(chatCompletionMessage), null, 100, 0.8, true));
```

See the AnthropicApi.java JavaDoc for further information.
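A streaming response arrives as a sequence of typed events: message_start, content_block_start, content_block_delta, content_block_stop, message_delta and message_stop. The sketch below reassembles the full reply from these events. It assumes each event has already been parsed into its type and, for text deltas, its text fragment. StreamEvent and StreamAccumulator are hypothetical types and are not the StreamResponse classes in AnthropicApi.

```java
import java.util.List;

// Hypothetical, already-parsed streaming event: its type and, for text deltas, the text.
record StreamEvent(String type, String text) {
}

final class StreamAccumulator {

    private StreamAccumulator() {
    }

    // Concatenates the text of every content_block_delta until message_stop arrives;
    // other events (message_start, content_block_stop, message_delta, ...) carry no text here.
    static String collectText(List<StreamEvent> events) {
        StringBuilder out = new StringBuilder();
        for (StreamEvent event : events) {
            if (event.type().equals("message_stop")) {
                break;
            }
            if (event.type().equals("content_block_delta") && event.text() != null) {
                out.append(event.text());
            }
        }
        return out.toString();
    }
}
```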

Low-level API Examples

The AnthropicApiIT.java tests provide some general examples of how to use the lightweight library.
